Start Date: 27/09/16

Tutor: James, Tedder

Student: Wyatt, Chapman

Unit 66 & 67

--------------------------------------------------------------------------------------------------------------------------

3D Modelling.

Creating anything, from a living creature to a simple stool, starts with one shape: a single object. From that object you create others, and weld, cut, duplicate, scale, rotate and more to build an entirely new entity. As you add more and more, the simple shape gradually forms into the image you have in mind. 3D modelling offers a huge amount of creative freedom. Programs like Maya even let you animate your object once you're satisfied with the results.

Many media use 3D modelling: TV, games, films, products, websites, animations, and architectural design. Each uses its models for a different purpose and presents them in a different way.

3D Modelling Software:

- Autodesk Maya - Maya has the widest range of uses: it's used in films, TV, games, and architecture.

The software has been used on many feature films, such as Monsters, Inc., The Matrix, Spider-Man, The Girl with the Dragon Tattoo, Avatar, Finding Nemo, Up, Hugo, Rango, and Frozen.

Students and teachers can get a free educational copy of Maya from the Autodesk Education Community. These copies are licensed for non-commercial use only. Users get a full 36-month license, and when it expires they can return to the community to request a new 36-month license.

- AutoCAD - AutoCAD was first released for PCs in December 1982, and a mobile version followed in 2010. It's a commercial application for 2D and 3D computer-aided design and drafting.

- 3D-Coat - 3D-Coat is a commercial digital sculpting program created by Pilgway. It was developed to create free-form organic and hard-surface 3D models from scratch. It comes with tools that let users sculpt, add polygons and create UV maps, then texture the finished models with a natural painting tool and render static images.

- Houdini - Houdini is 3D animation software developed by Side Effects Software in Toronto. Side Effects adapted Houdini from the PRISMS suite of procedural generation software tools.

Houdini has been used in several animated productions, including the films Frozen, Zootopia, Rio, and The Ant Bully.

For anyone who doesn't want to pay for the software, Side Effects Software published a limited version called Houdini Apprentice, which is free for non-commercial use.

Gaming/Polygons - Almost every game made today uses some form of 3D modelling software. Many studios use the packages above, but the most common is Maya. As well as dedicated modelling software, game engines often come with their own 3D modelling toolkits; Unreal Engine 4, for example, has its own modelling kit and animation set.

Making models for games is extremely time-consuming and tedious, and requires a lot of patience. There is also the problem of the polygon budget. In a game you want as few polygons as possible, because the engine is rendering thousands of assets in real time, which takes a lot of processing power. It isn't really a fixed budget so much as a limitation on modelling for games.
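To make the "limitation" concrete, here is a minimal sketch of totting up the triangles a frame has to draw. The asset names, triangle counts and the 500,000-triangle limit are all made-up numbers for illustration; real limits depend on the hardware and engine.

```python
# Hypothetical scene: (asset name, triangles per copy, copies on screen).
# These numbers are invented for illustration only.
scene = [
    ("player_character", 20_000, 1),
    ("enemy", 8_000, 12),
    ("tree", 1_500, 150),
    ("rock", 300, 400),
]

# Assumed per-frame triangle limit for the target hardware (illustrative).
FRAME_TRIANGLE_LIMIT = 500_000


def triangles_on_screen(assets):
    """Total triangles the engine must draw this frame."""
    return sum(tris * copies for _, tris, copies in assets)


def worst_offenders(assets, top=2):
    """Names of the assets contributing the most triangles, largest first."""
    ranked = sorted(assets, key=lambda a: a[1] * a[2], reverse=True)
    return [name for name, _, _ in ranked[:top]]


total = triangles_on_screen(scene)
print(f"{total:,} triangles; limit {FRAME_TRIANGLE_LIMIT:,}")
if total > FRAME_TRIANGLE_LIMIT:
    print("Over the limit; reduce:", worst_offenders(scene))
```

Note that the 150 cheap trees cost more than the detailed player character: how many copies are on screen matters as much as the count per model.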

Every model is made up of polygons, which can be subdivided. You can add more subdivisions (raising the polycount) or remove them. Each part is editable: you choose whether to select faces (a single section of the subdivided surface), edges, vertices and so on. The more subdivisions and polygons a model has, the heavier the toll on your computer, because it has more to process.
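A small sketch shows how quickly subdivision raises the polycount. It assumes a plain linear split (each quad becomes four, using edge midpoints and a face centre, with no smoothing such as Catmull-Clark); `unit_cube` and `subdivide` are hypothetical helper names, not functions from Maya or any other package.

```python
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]
Quad = Tuple[int, int, int, int]


def unit_cube() -> Tuple[List[Vec3], List[Quad]]:
    """8 vertices and 6 quad faces; vertex index = 4*x + 2*y + z."""
    verts = [(float(x), float(y), float(z))
             for x in (0, 1) for y in (0, 1) for z in (0, 1)]
    quads = [(0, 1, 3, 2), (4, 6, 7, 5),   # x = 0 and x = 1 sides
             (0, 4, 5, 1), (2, 3, 7, 6),   # y = 0 and y = 1 sides
             (0, 2, 6, 4), (1, 5, 7, 3)]   # z = 0 and z = 1 sides
    return verts, quads


def average(points: List[Vec3]) -> Vec3:
    n = len(points)
    return tuple(sum(p[i] for p in points) / n for i in range(3))


def subdivide(verts: List[Vec3], quads: List[Quad]):
    """Split every quad into 4 using edge midpoints and a face centre.
    Midpoints are cached per edge so neighbouring faces share them."""
    verts = list(verts)
    midpoint: Dict[Tuple[int, int], int] = {}

    def mid(a: int, b: int) -> int:
        key = (min(a, b), max(a, b))
        if key not in midpoint:
            verts.append(average([verts[a], verts[b]]))
            midpoint[key] = len(verts) - 1
        return midpoint[key]

    new_quads: List[Quad] = []
    for a, b, c, d in quads:
        ab, bc, cd, da = mid(a, b), mid(b, c), mid(c, d), mid(d, a)
        verts.append(average([verts[a], verts[b], verts[c], verts[d]]))
        centre = len(verts) - 1
        new_quads += [(a, ab, centre, da), (ab, b, bc, centre),
                      (centre, bc, c, cd), (da, centre, cd, d)]
    return verts, new_quads


verts, quads = unit_cube()
for level in range(4):
    print(f"level {level}: {len(verts)} vertices, {len(quads)} quads "
          f"({2 * len(quads)} triangles)")
    verts, quads = subdivide(verts, quads)
```

Each level multiplies the face count by four (6, 24, 96, 384 quads), which is why a few extra subdivision levels can quickly become expensive.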

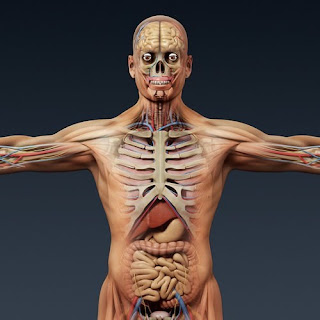

As the image above shows, this character has more than 100 polygons, and each one has been edited almost to perfection to create the model. The head alone would have taken a good couple of hours to create. The more polygons a model has, the longer the system takes to render it.

It's important to plan how many polygons a model will have: enough that the player can identify the object, but not so many that they slow down rendering. The best way to do this is to look at the object's silhouette and note which details are needed to distinguish it from other objects. Interior detail can come afterwards, since 2D textures can make up for the limited number of polygons. Of course, some interior details need to be 3D, but there shouldn't be enough of them to affect rendering time.

Developers should almost always remove unseen polygons: most of the time the player won't see the inside of an object, so those polygons waste processing power.
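How unseen polygons are identified depends on the object. One simple case is an object that always rests on the floor, so its downward-facing faces can never be seen. The sketch below assumes exactly that and drops any triangle whose normal points almost straight down; `remove_hidden_bottom` and the -0.99 threshold are hypothetical choices for illustration, not a general method.

```python
from typing import List, Tuple

Vec3 = Tuple[float, float, float]
Triangle = Tuple[Vec3, Vec3, Vec3]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def normal(tri: Triangle) -> Vec3:
    """Face normal (not normalised); its direction follows the
    right-hand rule from the vertex order a -> b -> c."""
    a, b, c = tri
    return cross(sub(b, a), sub(c, a))


def remove_hidden_bottom(tris: List[Triangle]) -> List[Triangle]:
    """Drop faces pointing straight down (-Y), assuming the object always
    sits on the floor so those faces can never be seen."""
    kept = []
    for tri in tris:
        nx, ny, nz = normal(tri)
        length = (nx * nx + ny * ny + nz * nz) ** 0.5
        if length == 0 or ny / length > -0.99:
            kept.append(tri)
    return kept


top = ((0.0, 1.0, 0.0), (0.0, 1.0, 1.0), (1.0, 1.0, 0.0))     # normal +Y
bottom = ((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 0.0, 1.0))  # normal -Y
side = ((0.0, 0.0, 0.0), (0.0, 1.0, 0.0), (1.0, 0.0, 0.0))    # normal -Z
print(len(remove_hidden_bottom([top, bottom, side])))  # 2
```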

It's best to build all your models from triangles or quads. This matters because when you export the model from the 3D software, or import it into the game engine, its polygons are triangulated, since triangles are easier to calculate when rendering.

By keeping your polygons as quads or tris (triangles), you minimise the chance of the system making your model more complex than it needs to be.

Having a quad triangulated isn't a big issue, since it can only be triangulated in a very limited number of ways.

Once a polygon has more than four sides, things get more complicated, as the pentagon below shows: it can be triangulated in more ways.

Each vertex of a polygon can be placed independently. A quad has only four edges, so there are only two ways to split it into triangles. A pentagon has five edges, and a convex pentagon can be split in five different ways (always into three triangles). With more possible splits, the result is harder to predict, and the engine's choice may not match what the modeller intended.
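The counts above can be checked by brute force. This sketch enumerates every triangulation of a convex polygon by fixing one edge and trying each possible apex for the triangle on that edge; for convex shapes the counts follow the Catalan numbers (1, 2, 5, 14, ...). A concave polygon may have fewer valid options, which this sketch does not model.

```python
from typing import List, Sequence, Tuple

Tri = Tuple[int, int, int]


def triangulations(poly: Sequence[int]) -> List[List[Tri]]:
    """Every way to split a convex polygon (vertex indices in order)
    into triangles using non-crossing diagonals."""
    if len(poly) < 3:
        return [[]]          # a single edge needs no triangles
    first, last = poly[0], poly[-1]
    results: List[List[Tri]] = []
    # The edge (first, last) belongs to exactly one triangle; try each apex.
    for k in range(1, len(poly) - 1):
        apex_tri = (first, poly[k], last)
        for left in triangulations(poly[: k + 1]):
            for right in triangulations(poly[k:]):
                results.append(left + [apex_tri] + right)
    return results


for sides, name in [(3, "triangle"), (4, "quad"), (5, "pentagon"), (6, "hexagon")]:
    options = triangulations(list(range(sides)))
    print(f"{name}: {len(options)} ways, {len(options[0])} triangles each")
```

Every option for an n-sided polygon uses n - 2 triangles, so the number of triangles stays the same; what changes is which diagonals are used and therefore how the surface is shaded.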

Lastly, LODs (levels of detail). LOD models are great for rendering performance: when a model is seen from far away, the developer can reduce the detail used for it, decreasing its processing cost.

LOD models are created by giving an object several versions of different complexity; which one is displayed depends on how far the object is from the camera.

When creating a lower LOD model, keep in mind that it doesn't need to look high quality. Instead, aim for the lowest quality possible while the object still resembles its close-up version. Because the model is only low quality when seen from afar, you can fit more models into one level without making the game choppy.
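Choosing an LOD by distance can be sketched as a simple lookup. The barrel meshes, switch distances and triangle counts below are invented for illustration, and real engines often switch on screen size rather than raw distance; this only shows the idea.

```python
from typing import List, Tuple

# Hypothetical LOD chain for one asset:
# (furthest camera distance in metres, mesh name, triangle count).
LODS: List[Tuple[float, str, int]] = [
    (10.0, "barrel_LOD0", 2_000),   # full detail, close up
    (30.0, "barrel_LOD1", 600),
    (80.0, "barrel_LOD2", 150),     # lowest detail, far away
]


def pick_lod(distance: float) -> Tuple[str, int]:
    """Return the (mesh, triangles) to draw at this camera distance."""
    for max_distance, mesh, triangles in LODS:
        if distance <= max_distance:
            return mesh, triangles
    _, mesh, triangles = LODS[-1]   # further than every threshold
    return mesh, triangles


for d in (5.0, 25.0, 200.0):
    print(d, pick_lod(d))
```

With these numbers, fifty barrels in the distance cost 50 × 150 = 7,500 triangles instead of 100,000 at full detail.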

Film - Every film that uses 3D modelling/CGI pre-renders its models. Pre-rendering a model or scene lets you add an almost unlimited number of polygons, so the designers can go into an incredible level of detail. The downside is that it increases rendering time dramatically: a single frame can take hours to render.

For "Monsters University", it took up to 29 hours to render a single frame. That amount of detail and quality could never be rendered in real time: it could take minutes or even hours just to see a character move one leg three inches.

From the image above we can see that everything was made in a 3D modelling program, every little detail from the paper on the clipboard to the screws on the door. And this is just one section of the scene; the rest of it is also made entirely from 3D models. Imagine trying to render all of those polygons in real time.
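The gap between pre-rendering and real time is easiest to see with some arithmetic on the 29-hour figure quoted above. This sketch assumes the worst case (every frame takes the full 29 hours on a single machine), a 24 fps film and a 60 fps game; studios actually render many frames in parallel on render farms, so wall-clock time is far lower.

```python
HOURS_PER_FRAME = 29      # "up to 29 hours" figure quoted above
FILM_FPS = 24             # assumed film frame rate
GAME_FPS = 60             # assumed real-time target

film_seconds_per_frame = HOURS_PER_FRAME * 60 * 60     # 104,400 s
game_seconds_per_frame = 1 / GAME_FPS                  # about 0.0167 s

# Machine-hours to render one second of finished footage.
hours_per_second_of_footage = HOURS_PER_FRAME * FILM_FPS
# How many times longer the film frame takes than a real-time frame budget.
slowdown = film_seconds_per_frame / game_seconds_per_frame

print(f"One second of film: {hours_per_second_of_footage} machine-hours")
print(f"That frame is {slowdown:,.0f}x over a {GAME_FPS} fps budget")
```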

Animation - Before a 3D model can be animated, it needs joints and control handles so the animators can pose it. Setting these up is known as "rigging".

Rigging is when riggers put a digital skeleton into a 3D model. As you would expect, the skeleton is made up of joints and "bones", and the animators use it to pose the 3D model. Simple poses can take hours, and animations or poses on par with high-quality studios can take days or weeks.

When setting up the skeleton there is a root joint, to which every other joint is connected, either directly or indirectly. This structure is called the "joint hierarchy".
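A joint hierarchy can be represented as a tree in which every joint stores its parent. The skeleton below is a simplified, hypothetical one (real rigs have many more joints and their own naming conventions) with "hips" as the root.

```python
from typing import Dict, List, Optional

# Hypothetical simplified skeleton: each joint maps to its parent (None = root).
PARENT: Dict[str, Optional[str]] = {
    "hips": None,            # root joint
    "spine": "hips",
    "neck": "spine",
    "head": "neck",
    "shoulder_L": "spine",
    "elbow_L": "shoulder_L",
    "wrist_L": "elbow_L",
    "knee_L": "hips",
    "ankle_L": "knee_L",
}


def chain_to_root(joint: str) -> List[str]:
    """Joints from `joint` up to the root, following parent links."""
    chain = [joint]
    while PARENT[chain[-1]] is not None:
        chain.append(PARENT[chain[-1]])
    return chain


def descendants(joint: str) -> List[str]:
    """Every joint below `joint` in the hierarchy (directly or indirectly)."""
    children = [j for j, p in PARENT.items() if p == joint]
    result: List[str] = []
    for child in children:
        result += [child] + descendants(child)
    return result


print(chain_to_root("wrist_L"))   # ['wrist_L', 'elbow_L', 'shoulder_L', 'spine', 'hips']
print(descendants("shoulder_L"))  # ['elbow_L', 'wrist_L']
```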

There are two different rigging techniques:

Forward Kinematics (FK) - "one of two basic ways to calculate the joint movement of a fully rigged character. When using FK rigging, any given joint can only affect parts of the skeleton that fall below it on the joint hierarchy."

Inverse Kinematics (IK) - "the reverse process from forward kinematics, and is often used as an efficient solution for rigging a character's arms and legs. With an IK rig, the terminating joint is directly placed by the animator, while the joints above it on the hierarchy are automatically interpolated by the software."

Rigging Info Source
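The two techniques quoted above can be sketched in 2D for a two-bone limb such as an upper arm and forearm. This is a simplified illustration under stated assumptions: angles in radians, the root joint at the origin, and an analytic law-of-cosines solver for IK, which is just one of several IK methods; rigging tools like Maya work in 3D with their own solvers.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def forward_kinematics(lengths: List[float], angles: List[float]) -> List[Point]:
    """FK: each angle is relative to the parent bone, so rotating a joint
    moves only the joints below it. Returns every joint position,
    starting with the root joint at (0, 0)."""
    x = y = heading = 0.0
    points = [(x, y)]
    for length, angle in zip(lengths, angles):
        heading += angle                      # accumulate parent rotations
        x += length * math.cos(heading)
        y += length * math.sin(heading)
        points.append((x, y))
    return points


def two_bone_ik(upper: float, lower: float, target: Point) -> Tuple[float, float]:
    """IK: the animator places the end joint at `target`; the root and
    middle joint angles are solved with the law of cosines."""
    tx, ty = target
    # Clamp the distance so unreachable targets give a fully stretched or folded limb.
    dist = max(abs(upper - lower), min(upper + lower, math.hypot(tx, ty)))
    cos_mid = (dist ** 2 - upper ** 2 - lower ** 2) / (2 * upper * lower)
    mid = math.acos(max(-1.0, min(1.0, cos_mid)))
    root = math.atan2(ty, tx) - math.atan2(lower * math.sin(mid),
                                           upper + lower * math.cos(mid))
    return root, mid


# FK: bending the elbow 90 degrees leaves the shoulder and elbow where they are.
print(forward_kinematics([2.0, 1.5], [0.0, math.pi / 2]))

# IK: place the wrist at (2.5, 1.0), solve the angles, then check with FK.
shoulder, elbow = two_bone_ik(2.0, 1.5, (2.5, 1.0))
wrist = forward_kinematics([2.0, 1.5], [shoulder, elbow])[-1]
print(f"shoulder {math.degrees(shoulder):.1f} deg, elbow {math.degrees(elbow):.1f} deg")
print(f"wrist at ({wrist[0]:.3f}, {wrist[1]:.3f})")
```

FK goes from angles to positions; IK goes the other way, which is why it suits arms and legs where the animator cares about where the hand or foot ends up.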

Character animation is the process of giving life to a static model by expressing thought, emotion and personality.

Creature animation follows much the same principles as character animation, but gives thought, emotion and personality to animals, fantasy creatures or prehistoric animals.

Visual effects animation is, again, much the same, except that the animator animates rain, clouds, water, lightning and other natural effects, as well as non-natural effects.

3D Modelling used in Architectural Design - A lot of architects use 3D modelling software such as SketchUp or Rhino3D for the earliest versions of their designs. When their concept is complete, they tend to use tools like ArchiCAD or Revit to make building simulations. Some architects use CNC machines to produce a physical 3D model, but doing it this way requires far more precision from the designer.

Education - Lately, 3D modelling has slowly been becoming a key teaching tool in schools. Not only does it make students more enthusiastic about the lesson, but it can also help them better understand the task at hand.

When students study medicine, they are often presented with 3D models of human anatomy created in software like Maya. This helps them visualise and understand the human body better than if the teacher had supplied a 2D illustration. Teachers can even use animated models, so students can observe in greater detail how the body functions.

File Size - As mentioned before, polygon count has a dramatic impact on how a game runs. When exporting or downloading a file, a high polygon count also means a larger file, which takes longer to download and may exceed the file size limit of the website you're uploading to.

In words, fixing this is simple: just use fewer, larger polygons. That isn't the whole story, though. One of the most challenging tasks for a designer is balancing detail and smooth surfaces against a small file size.
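A rough estimate shows why file size tracks polygon count. The layout below is a hypothetical uncompressed binary format (position, normal and UV per vertex, three indices per triangle, 4 bytes each), and the "vertices ≈ triangles / 2" ratio only holds for a closed mesh with well-shared vertices; real formats such as FBX or glTF store more data and may compress it.

```python
# Assumed layout of a simple uncompressed binary mesh file (hypothetical):
# each vertex stores position (3 floats), normal (3 floats) and UV (2 floats);
# each triangle stores 3 vertex indices; floats and indices are 4 bytes each.
FLOATS_PER_VERTEX = 3 + 3 + 2
BYTES_PER_FLOAT = 4
BYTES_PER_INDEX = 4


def mesh_size_bytes(vertices: int, triangles: int) -> int:
    """Estimated size of the vertex data plus the triangle index data."""
    vertex_bytes = vertices * FLOATS_PER_VERTEX * BYTES_PER_FLOAT
    index_bytes = triangles * 3 * BYTES_PER_INDEX
    return vertex_bytes + index_bytes


for tris in (1_000, 100_000, 2_000_000):
    verts = tris // 2      # rough ratio for a closed, well-shared mesh
    mb = mesh_size_bytes(verts, tris) / 1_000_000
    print(f"{tris:>9,} triangles ~ {mb:.2f} MB")
```

Under these assumptions each triangle costs about 28 bytes, so halving the polycount roughly halves the file.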

Rendering Time - There are different types of rendering, such as wireframe rendering and polygon-based rendering, or more advanced types like scanline rendering, ray tracing and radiosity. Rendering can be extremely time-consuming, taking days or weeks, but it can also take only a fraction of a second.

Real-Time Rendering - Real-time rendering is most commonly used in games and simulations, which run at anywhere from 20 to 120 frames per second (fps). Real-time rendering aims to show as much detail as possible in each frame, making it look as photorealistic as possible at an acceptable frame rate.
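The frame rates above translate directly into a time budget: everything in a frame has to be rendered in 1000 / fps milliseconds. A minimal sketch (`frame_budget_ms` is just an illustrative name):

```python
def frame_budget_ms(fps: float) -> float:
    """Time available to render one frame, in milliseconds."""
    return 1000.0 / fps


for fps in (20, 30, 60, 120):
    print(f"{fps:>3} fps -> {frame_budget_ms(fps):.1f} ms per frame")
```

At 120 fps the whole scene must be drawn in roughly 8 ms, compared with 50 ms at 20 fps.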

Non Real-Time - This is used in films and videos. Non-real-time rendering sacrifices processing time to gain a higher-quality image; for films, a single frame can take days to render. Films are usually played at 24 frames per second, while TV and video commonly use 25 or 30.

Radiosity - Radiosity is classed as a global illumination algorithm because the light arriving at a surface comes not only from the light sources but also from other surfaces reflecting that light.

Including radiosity in rendering adds realism to the finished scene, because it mimics the way illumination works in the real world.

If you were to use radiosity, however, the light coming through the window would light up the floor, which would then reflect onto the walls, and so on. Depending on each material, the reflected light would be dimmed slightly or heavily as it spreads to other areas.
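The bouncing described above is usually written as the radiosity equation, B_i = E_i + ρ_i Σ_j F_ij B_j: each patch's brightness B_i is its own emission E_i plus its reflectance ρ_i (the material) times the light it gathers from every other patch j, weighted by the form factor F_ij (the fraction of light leaving patch i that reaches patch j). The sketch below solves it iteratively for a hypothetical three-patch room; the reflectances and form factors are invented rather than computed from real geometry.

```python
from typing import List

# Hypothetical three-patch room. Form factors F[i][j] are invented for
# illustration, not computed from geometry; each row sums to less than 1.
names = ["window", "floor", "wall"]
emission = [1.0, 0.0, 0.0]        # only the window gives off light
reflectance = [0.0, 0.5, 0.8]     # material: share of incoming light that bounces
F = [[0.0, 0.6, 0.2],
     [0.2, 0.0, 0.3],
     [0.1, 0.3, 0.0]]


def solve_radiosity(E: List[float], rho: List[float], F: List[List[float]],
                    bounces: int = 50) -> List[float]:
    """Jacobi iteration of B_i = E_i + rho_i * sum_j F_ij * B_j.
    Each pass adds one more bounce; bounces=1 gives direct light only."""
    B = list(E)
    for _ in range(bounces):
        B = [E[i] + rho[i] * sum(F[i][j] * B[j] for j in range(len(B)))
             for i in range(len(B))]
    return B


direct = solve_radiosity(emission, reflectance, F, bounces=1)
full = solve_radiosity(emission, reflectance, F)
for name, d, b in zip(names, direct, full):
    print(f"{name:>6}: direct {d:.3f}, with bounces {b:.3f}")
```

With the extra bounces, the floor and wall both end up brighter than they would from the window's light alone, because each one reflects light onto the other.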